Mindful Garden: Supporting Reflection on Biosignals in a Co-Located Augmented Reality Mindfulness Experience

https://dl.acm.org/doi/10.1145/3500868.3559708

In CSCW Demo ’22, X–X, 2022, Virtual, Taipei. ACM, New York, NY, USA, 6 pages.

© 2022 Association for Computing Machinery. Manuscript submitted to ACM

We contribute Mindful Garden, an Augmented Reality Lens for Co-Located Mindfulness. HCI research has increasingly explored designing technology to support mindfulness. Augmented reality and sensors that detect biosignals both have the potential to support mindful experiences, by transforming people’s environments into more relaxing spaces and offering feedback to help people make sense of their physio-psychological states. We will demo Mindful Garden, a system for supporting reflection on biosignals in a mindfulness experience where two people are physically co-located. Mindful Garden has one person guide the other through meditation, representing the guided individual’s biosignals as flowers in a shared augmented reality environment. We leverage Snap Spectacles and a Muse 2 headband, showing the promise of ready-to-use consumer technologies for the purpose of mindfulness and well-being. To overcome technical limitations in accessing external data from within the AR lens, our demo illustrates a novel pipeline for real-time biomarker data streaming from the Muse 2 to the AR lens in the Snap Spectacles.

CCS Concepts: • Human-centered computing → Mixed / augmented reality; Collaborative and social computing devices.

Additional Key Words and Phrases: mindfulness, augmented reality, co-location, biosignals, brainwaves

1 INTRODUCTION

Mindfulness is part of ancient Buddhist and Hindu meditation traditions, focusing on a deep exploration of the mind, starting with a focus on breathing and awareness of bodily states [5]. Congruent with this definition is the pragmatic application of mindfulness as a therapy, Mindfulness-Based Stress Reduction (MBSR), which aims to help people relax, reduce stress, increase attention, or recover from trauma [14]. While regular mindfulness training is associated with greater mental and emotional health [1], co-located mindfulness meditation can additionally lead to greater connectedness, stronger peer support, and increased social skills. Among technical approaches to supporting co-located mindfulness, Augmented Reality (AR) offers new forms of social interaction that visually present biofeedback within the environment where people are meditating. HCI researchers of mindfulness have experimented with tracking and using real-time biofeedback to enhance interactions and create more enriching experiences [8]. When coupled with biofeedback, mindfulness technology has the potential to support self-observation, allowing people to better make sense of their physio-psychological states.

Leveraging the opportunity to apply AR to co-located mindfulness, we present Mindful Garden, a wearable prototype for co-located social mindfulness experiences, collecting and sharing biosignals indicative of relaxation and concentration from EEG data collected from a wearable headset. We use the Muse 2 headband to collect electroencephalography (EEG) data to observe three aspects of the participant’s mind states: concentration, relaxation and fatigue. Mindful Garden uses EEG biomarkers as inputs and maps them to the Snap Spectacles as animations of a flower. These animations allow people to immersively interact with their biomarker data, potentially leading to a deeper understanding of mindful states. To overcome technical limitations around data transfer to the Augmented Reality environment, we also developed a novel pipeline for integrating biosignals data into an augmented reality display. We process the collected EEG data into higher-level biosignals, transmitting them through inaudible sound frequencies to the microphone embedded in the augmented reality eyeglasses. Our pipeline then processes the signal, adjusting the environment to account for the biofeedback. More broadly, this research seeks to answer how sensed biosignals data can be used to create shared mindfulness experiences and what features, functions, and designs are meaningful to end users.

2 RELATED WORK

The design choices in Mindful Garden build on principles of mindfulness, technical strategies used to support mindfulness, and integration of sensed data and mixed reality.

2.1 Mindfulness

Mindfulness can be traced to the fifth-century text Visuddhimagga, which guides the mind to meditative states and the progression to peace. Its modern adaptation is commonly interpreted as a sequence of mind activities which start with a focus on an object or a sensation, followed by awareness of our bodily states and the mind's dance between full focus and aimless wandering [5]. Mindfulness also refers to an open awareness of what happens in the mind and in experience, without judging or reacting. The pragmatic application of mindfulness is commonly taught as a self-regulation tool to help people cope with stress and anxiety [14]. By training focus and awareness, mindfulness meditation can also promote a mindset and a practical construct towards human flourishing, which overlaps with positive psychology [3].

Cultivating positive prosocial emotion in mindfulness meditation is at least as important as recognizing and reducing one’s own negative feeling, if not more. In Buddhism, mindful meditation traditionally has been taught in groups. In psychotherapy, while most mental health clinicians implement therapy on an individual basis, mindfulness-based therapy is mostly delivered in a group setting to save cost [6]. While solitary mindfulness training is associated with greater mental and emotional health [23], watching others meditate may help induce a state of mindfulness and strengthen feelings of social connectivity [7]. Being mindful together can help people communicate with one another, learn from others’ insights, and receive assistance with isolation, particularly among people in close relationships [6].

2.2 Technology Support for Mindfulness

Within HCI and CSCW, systems for interactive mindfulness meditation have become more prevalent [16]. Moreover, mindfulness has been a frequent goal of HCI systems aiming to support affective health [18]. Researchers including Döllinger et al. have argued that mixed reality devices provide significant opportunities for supporting mindfulness activities, including surfacing bodily or mental states using biofeedback [21]. The multimodal nature of mixed reality systems, spanning visual design, immersive interactions, haptic feedback, virtual environments, and biofeedback mechanisms, offers a plethora of opportunities to create valuable interventions and experiences [16].

However, there are gaps in existing systems worth exploring. First, Döllinger et al.’s review of technology for mindfulness suggests that while VR has been examined, very few systems focus on AR [4]. Second, existing AR work has not examined AR eyewear systems. Third, mindfulness interactions are almost exclusively considered an individual practice. Fourth, current research has supported “passive” rather than “active” mindfulness activities, and therefore interactions in mixed reality systems are generally passive [15]. Such a passive system “only leads to a limited enhancement of mindfulness compared to conventional guided meditation tasks” [4]. We therefore see an opportunity to examine immersive, interactive, contextually aware (via biosignals), and social mindfulness activities in AR.

2.3 Sensed Data and the Potential of Mixed Reality for Mindfulness

Technology frequently leverages sensed data metrics like breathing rate, heart rate, and EEG to offer targets and guidance for meditation. EEG neurofeedback has the potential to increase users’ performance during mindfulness meditation by assisting meditators in staying in the mindful state [8]. Providing technological support during initial meditation attempts could also increase meditators’ performance, modify perceptions that meditation involves doing nothing [8], and thus increase interest in continuing practice. Further, sensed data have increasingly been incorporated into mixed-reality devices to support similar types of experiences. Mixed-reality systems have adapted digital environments according to sensed biomarkers to support formal mindfulness practices [10, 17, 19]. However, current limitations in AR eyewear software and hardware make it difficult to integrate biosignals. In particular, consumer-grade AR eyewear provides limited support for integrating biosensing data collected from external devices into AR displays.

3 DESIGN AND IMPLEMENTATION OF THE MINDFUL GARDEN SYSTEM

3.1 Mindfulness Experience Design

We implemented the mindfulness technique of breath counting in Mindful Garden because of its association with body relaxation and low cognitive load [12]. Two users equip Muse 2 and Spectacles headsets to join the AR environment. The system randomly chooses one user to guide the other through the practice. The guided user is told to start the practice with a focused attention on the breath, inhale, hold the breath, exhale and repeat the process. The two users take turns reading the meditation script, with a full mindfulness session lasting five minutes.

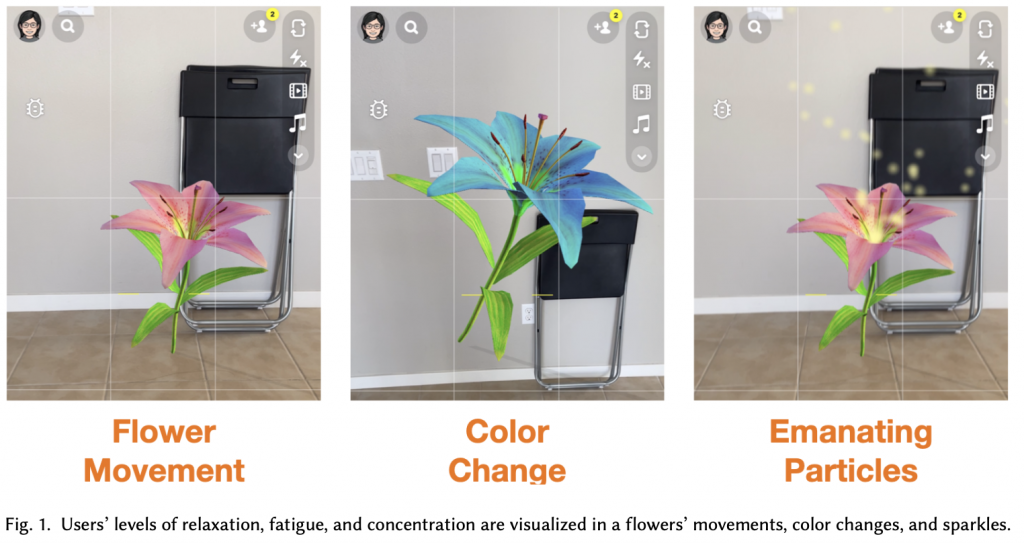

To engage the co-located meditation partner with the biosignal data, we modified an object in the Snap Lens application according to changes in the biosignal data of the person being guided. We use a flower to represent the data because naturalistic representations of mental state may help users experience mindfulness [13]. Both users can see the flower visually change, and after each session they can review how the flower model changed over the exercise. The flower’s movements, color, and sparkles reflect the user’s levels of three mental states correlated with mindfulness: concentration, relaxation, and fatigue, respectively (Figure 1). Concentration was chosen as the primary indicator of state mindfulness because the concept is a commonly operationalized definition of mindfulness [11]. Surfacing concentration data aims to encourage a more rapid return to mindful states. We chose relaxation and fatigue as secondary indicators, as these states feature in traditional mindfulness practices. We kept visual changes of the flower subtle, balancing its role as a rich environmental stimulus for surfacing biosignals against the need to minimize distraction.

3.2 System Implementation

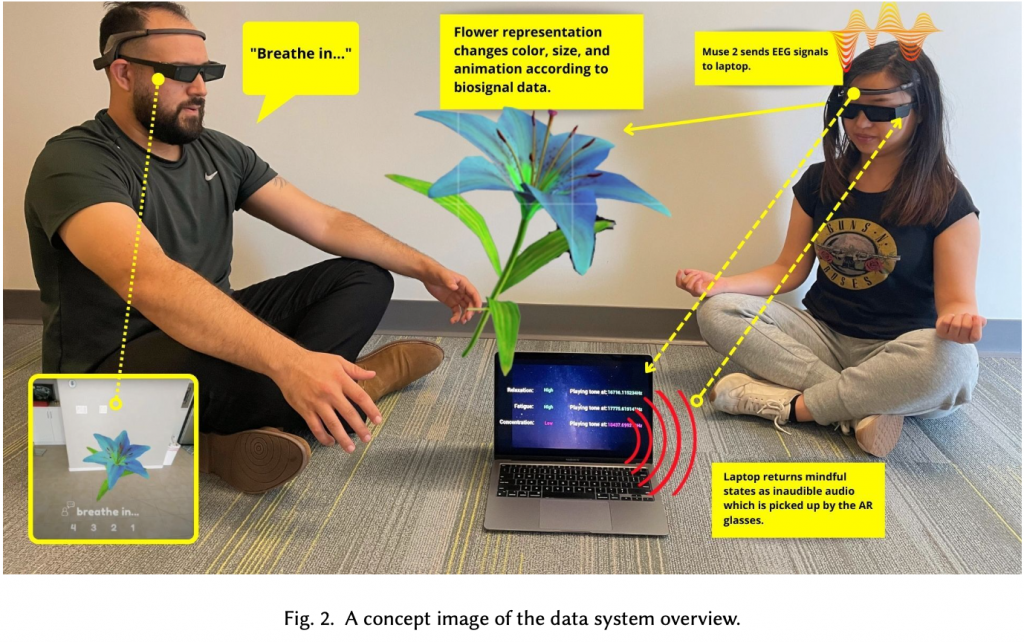

When using Mindful Garden, both users wear the Muse 2 headband [8] for data collection and the Snap Spectacles for biofeedback. A laptop running Unreal Engine is placed in the physical space and is used for generating and mapping sound. Once the guided user starts meditating, EEG data is collected through the Muse and streamed to the laptop for processing. Processed data is sent to the Spectacles as inaudible sound clips, which can be recognized by the device’s Audio Analyzer module. Figure 2 provides an overview of our system.

EEG data within particular frequency bands can correlate with particular mindful states [20]. We calculate amplitudes in the 𝛼, 𝜃, and 𝛽 frequency bands, as well as the 𝛽/𝜃 and (𝜃 + 𝛼)/𝛽 band power ratios, before sending the signals to Unreal. 𝛼 band power is used as an index of the degree of relaxation [2]. 𝛽/𝜃 is an index of mental activity and concentration [22], with a higher 𝛽/𝜃 ratio interpreted as a higher level of concentration. (𝜃 + 𝛼)/𝛽 is an index of the degree of fatigue [9]. Because Snap Lens Studio does not currently support external API connections, we developed an alternative pipeline for loading the data into the Lens. Using the Unreal SynthComponent module, we map the processed data to sound clips in an inaudible frequency band, which can be received by the Snap Spectacles. Our approach takes a combination of processed EEG and ratio indices and uses them to assign one of a set of labels, “high”, “middle”, or “low”, to the level observed during each short meditation session. Each label is then generated as an audio clip at a defined frequency that can be captured by the Spectacles through the Snap App’s Audio Analyzer module. To minimize the influence of the real-time audio stream on the meditation, we chose frequencies that are inaudible to human hearing, selecting 17500, 18000, and 18500 Hz to represent low, mid, and high levels. As the amplitude of each EEG frequency band changes during the meditation session, the audio clips generated for data communication change accordingly. As the Audio Analyzer listens to the data stream, the flower model responds to changes in real time, visible in the AR environment. The Audio Analyzer drives different visualizations (movement, color, particles) based on the power of the audio signal in pre-defined frequency ranges.
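The encoding and decoding steps described above can be illustrated with a minimal Python sketch. It is not the Unreal SynthComponent or Snap Audio Analyzer implementation used in the demo. The three index formulas and the three tone frequencies come from the text; the sample rate, clip duration, level thresholds, and all function names are assumptions introduced for illustration. The text does not specify how the three indices share the audio channel, so the sketch encodes the level of a single index. Tone detection uses the Goertzel algorithm as a stand-in for measuring signal power in a pre-defined frequency range.

```python
import math

SAMPLE_RATE = 48_000  # Hz; assumed audio sample rate (not stated in the text)
# Tone frequencies for each level, as given in the text.
LEVEL_TONES_HZ = {"low": 17_500, "mid": 18_000, "high": 18_500}


def eeg_indices(alpha, theta, beta):
    """Compute the three indices from band powers, following the text."""
    return {
        "relaxation": alpha,                  # alpha band power
        "concentration": beta / theta,        # beta/theta ratio
        "fatigue": (theta + alpha) / beta,    # (theta + alpha)/beta ratio
    }


def to_level(value, low_cut, high_cut):
    """Quantize an index into low/mid/high; the cut points are hypothetical."""
    if value < low_cut:
        return "low"
    if value < high_cut:
        return "mid"
    return "high"


def synth_tone(freq_hz, duration_s=0.05, amplitude=0.2):
    """Generate a sine clip as a list of float samples (assumed clip length)."""
    n = int(SAMPLE_RATE * duration_s)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
            for i in range(n)]


def goertzel_power(samples, freq_hz):
    """Signal power at a single target frequency (Goertzel algorithm)."""
    coeff = 2 * math.cos(2 * math.pi * freq_hz / SAMPLE_RATE)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def decode_level(samples):
    """Return the level whose tone carries the most power in the clip."""
    return max(LEVEL_TONES_HZ,
               key=lambda lvl: goertzel_power(samples, LEVEL_TONES_HZ[lvl]))


if __name__ == "__main__":
    # Placeholder band powers and thresholds, chosen only for illustration.
    idx = eeg_indices(alpha=4.0, theta=2.0, beta=6.0)
    level = to_level(idx["concentration"], low_cut=1.0, high_cut=2.0)
    clip = synth_tone(LEVEL_TONES_HZ[level])
    print(level, decode_level(clip))
```

Spacing the tones 500 Hz apart keeps them easy to separate with short clips, but a real microphone path adds ambient noise, so a practical receiver would likely also require the winning tone's power to exceed a noise threshold before updating the flower.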

4 LIMITATIONS

We encountered several technical limitations implementing Mindful Garden. First, the system is sensitive to noise in the physical environment, as it relies on an audio channel to transfer data from the sensor to AR. Second, data inaccuracies are unavoidably introduced by processing brainwaves, transmitting data through sound, and interpreting the signal. Last, the system requires users to have multiple devices, which limits who can access the experience. In addition to technical limitations, the design of Mindful Garden warrants deeper exploration. First, visualizing abstract biosignals can help interpretation, but people may interpret the visualizations differently. Preliminary evaluations suggest that people associate different emotions with the same color, suggesting value in allowing the visual representation to be customized to align with people’s personal interpretations. Additionally, social interaction and visualization might be a distraction during meditation, and surfacing biosignals may heighten social concerns around co-located mindfulness.

5 CONCLUSION

We present Mindful Garden, a wearable Augmented Reality prototype for co-located social mindfulness. Mindful Garden transmits real-time EEG data captured by the Muse 2 to the Snap Spectacles, visualizing and sharing the data in an AR garden during co-located mindfulness. This information allows for understanding psychological states and engaging with biosignals in ways connected with the physical world. Our co-located AR experience has the potential to enhance feelings of connectedness or relatedness, allowing meditation partners to provide and receive emotional and mental support.

6 ACKNOWLEDGMENTS

Thanks to Tim Chong, Erica Cruz, Jennifer He, Melissa Powers, Samantha Reig, Ava Robinson, Yu Jiang Tham, and Rajan Vaish for their help with the initial conceptualization and implementation of the Mindful Garden lens. This work was supported in part by the Snap Creative Challenge on the Future of Co-located Social Augmented Reality.

REFERENCES

- [1] Albert Bandura. 1991. Social cognitive theory of self-regulation. Organizational behavior and human decision processes 50, 2 (1991), 248–287.

- [2] O.M. Bazanova and D. Vernon. 2014. Interpreting EEG alpha activity. Neuroscience & Biobehavioral Reviews 44 (2014), 94–110. https://doi.org/10.1016/j.neubiorev.2013.05.007 Applied Neuroscience: Models, methods, theories, reviews. A Society of Applied Neuroscience (SAN) special issue.

- [3] Roger Bretherton. 2017. Itai Ivtzan and Tim Lomas (eds.): Mindfulness in Positive Psychology: The Science of Meditation and Wellbeing. Routledge, London, UK, 2016, 348 pp. Mindfulness 8, 1 (Feb. 2017), 254–256. https://doi.org/10.1007/s12671-016-0667-9

- [4] Nina Döllinger, Carolin Wienrich, and Marc Erich Latoschik. 2021. Challenges and Opportunities of Immersive Technologies for Mindfulness Meditation: A Systematic Review. Frontiers in Virtual Reality 2 (2021), 29.

- [5] Daniel Goleman and Richard J. Davidson. 2017. Altered traits: science reveals how meditation changes your mind, brain, and body. Avery, New York. OCLC: ocn971949235.

- [6] Melbourne Academic Mindfulness Interest Group. 2006. Mindfulness-Based Psychotherapies: A Review of Conceptual Foundations, Empirical Evidence and Practical Considerations. Australian & New Zealand Journal of Psychiatry 40, 4 (2006), 285–294. https://doi.org/10.1080/j.1440-1614.2006.01794.x PMID: 16620310.

- [7] Adam W. Hanley, Vincent Dehili, Deidre Krzanowski, Daniela Barou, Natalie Lecy, and Eric L. Garland. 2021. Effects of Video-Guided Group vs. Solitary Meditation on Mindfulness and Social Connectivity: A Pilot Study. Clinical Social Work Journal (June 2021), 1–9. https://doi.org/10.1007/s10615-021-00812-0

- [8] Hugh Hunkin, Daniel L. King, and Ian Zajac. 2020. EEG Neurofeedback During Focused Attention Meditation: Effects on State Mindfulness and Meditation Experiences. Mindfulness 12, 4 (2020), 841–851. https://doi.org/10.1007/s12671-020-01541-0

- [9] Budi Thomas Jap, Sara Lal, Peter Fischer, and Evangelos Bekiaris. 2009. Using EEG spectral components to assess algorithms for detecting fatigue. Expert Systems with Applications 36, 2, Part 1 (2009), 2352–2359. https://doi.org/10.1016/j.eswa.2007.12.043

- [10] Simo Järvelä, Benjamin Cowley, Mikko Salminen, Giulio Jacucci, Juho Hamari, and Niklas Ravaja. 2021. Augmented Virtual Reality Meditation: Shared Dyadic Biofeedback Increases Social Presence Via Respiratory Synchrony. ACM Transactions on Social Computing 4, 2 (2021), 1–19.

- [11] Daniel B. Levinson, Eli L. Stoll, Sonam D. Kindy, Hillary L. Merry, and Richard J. Davidson. 2014. A mind you can count on: validating breath counting as a behavioral measure of mindfulness. Frontiers in Psychology 5 (2014). https://www.frontiersin.org/article/10.3389/fpsyg.2014.01202

- [12] Anna-Lena Lumma, Bethany E. Kok, and Tania Singer. 2015. Is meditation always relaxing? Investigating heart rate, heart rate variability, experienced effort and likeability during training of three types of meditation. International Journal of Psychophysiology 97, 1 (July 2015), 38–45. https://doi.org/10.1016/j.ijpsycho.2015.04.017

- [13] María V Navarro-Haro, Yolanda López-del Hoyo, Daniel Campos, Marsha M Linehan, Hunter G Hoffman, Azucena García-Palacios, Marta Modrego-Alarcón, Luis Borao, and Javier García-Campayo. 2017. Meditation experts try Virtual Reality Mindfulness: A pilot study evaluation of the feasibility and acceptability of Virtual Reality to facilitate mindfulness practice in people attending a Mindfulness conference. PloS one 12, 11 (2017), e0187777.

- [14] Sharon Praissman. 2008. Mindfulness-based stress reduction: A literature review and clinician’s guide. Journal of the American Academy of Nurse Practitioners 20, 4 (2008), 212–216. https://doi.org/10.1111/j.1745-7599.2008.00306.x

- [15] Joan Sol Roo, Renaud Gervais, Jeremy Frey, and Martin Hachet. 2017. Inner garden: Connecting inner states to a mixed reality sandbox for mindfulness. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. 1459–1470.

- [16] Kavous Salehzadeh Niksirat, Chaklam Silpasuwanchai, Mahmoud Mohamed Hussien Ahmed, Peng Cheng, and Xiangshi Ren. 2017. A framework for interactive mindfulness meditation using attention-regulation process. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. 2672–2684.

- [17] Mikko Salminen, Simo Järvelä, Antti Ruonala, Janne Timonen, Kristiina Mannermaa, Niklas Ravaja, and Giulio Jacucci. 2018. Bio-adaptive social VR to evoke affective interdependence: DYNECOM. In 23rd international conference on intelligent user interfaces. 73–77.

- [18] Pedro Sanches, Axel Janson, Pavel Karpashevich, Camille Nadal, Chengcheng Qu, Claudia Daudén Roquet, Muhammad Umair, Charles Windlin, Gavin Doherty, Kristina Höök, et al. 2019. HCI and Affective Health: Taking stock of a decade of studies and charting future research directions. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. 1–17.

- [19] Ekaterina R Stepanova, John Desnoyers-Stewart, Philippe Pasquier, and Bernhard E Riecke. 2020. JeL: Breathing together to connect with others and nature. In Proceedings of the 2020 ACM Designing Interactive Systems Conference. 641–654.

- [20] Michal Teplan et al. 2002. Fundamentals of EEG measurement. Measurement science review 2, 2 (2002), 1–11.

- [21] Nađa Terzimehić, Renate Häuslschmid, Heinrich Hussmann, and mc schraefel. 2019. A Review & Analysis of Mindfulness Research in HCI: Framing Current Lines of Research and Future Opportunities. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. 1–13.

- [22] Dana van Son, Frances M. De Blasio, Jack S. Fogarty, Angelos Angelidis, Robert J. Barry, and Peter Putman. 2019. Frontal EEG theta/beta ratio during mind wandering episodes. Biological Psychology 140 (2019), 19–27. https://doi.org/10.1016/j.biopsycho.2018.11.003

- [23] Helané Wahbeh, Matthew N. Svalina, and Barry Oken. 2014. Group, One-on-One, or Internet? Preferences for Mindfulness Meditation Delivery Format and their Predictors. Open Medicine Journal 1, 1 (Nov. 2014), 66–74. https://doi.org/10.2174/1874220301401010066